Why ChatGPT’s a big attraction for cybercriminals – ET CISO


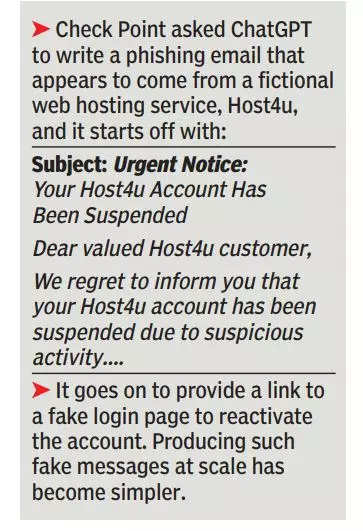

Sven Krasser, SVP and chief scientist at CrowdStrike, says generative AI models like ChatGPT are enabling spear phishing at scale. Spear phishing is an email or other electronic communications scam targeted at a specific individual or organisation. A basic knowledge of coding and minimal resources are all it takes to create realistic phishing emails.

AI and natural language processing (NLP) systems have also reached a stage where humans find it difficult to distinguish machine-generated prose from human-written text in casual conversations. Synthetic media such as deepfakes, Krasser says, allow an adversary to look and sound like a trusted person on a video call.

Chester Wisniewski, field CTO applied research at Sophos, who has been experimenting with ChatGPT since it became publicly available, says it is easy to persuade the tool to help create convincing phishing lures and to respond in a conversational way that could advance romance scams and business email compromise attacks. Cybercriminals are also circumventing ChatGPT’s access controls through bots so that they cannot be identified.

Access to ChatGPT is restricted based on the user’s IP address, payment card, and phone number. But Check Point’s research team notes active chatter in underground forums about how to use OpenAI’s API to bypass these barriers. “This is mostly done by creating Telegram bots that use the API, and these bots are advertised in hacking forums to increase their exposure,” Sergey Shykevich, threat group manager at Check Point Software, says.

Sundar Balasubramanian, MD for India at Check Point Software, says the company has found users of dark web hacking forums attempting to use programs like ChatGPT to craft phishing lures that sound very genuine. “Miscreants are even using it in creative ways, like developing crypto currency payment systems with real-time currency trackers,” he says. In effect, they try to get people to invest in cryptocurrencies that do not even exist.

Jhilmil Kochar, MD of CrowdStrike India, says ChatGPT can also serve as an upgrade to malware-as-a-service. Instead of getting a person in, say, Russia to write malware for you, you can ask ChatGPT to do it automatically.

The best way to tackle ChatGPT-related threats is to train and deploy one’s own AI engines to identify malicious requests. People also need to stay alert to phishing scams and social engineering attacks, and be more wary of suspicious emails and click-invites. Balasubramanian says an organisation could also require authentication and authorisation before anyone can use the OpenAI engine. This would limit attackers’ ability to misuse any ChatGPT-based chatbots the organisation runs.
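
As a rough illustration of the authentication and authorisation idea Balasubramanian describes, the sketch below checks a caller before a prompt is passed to a chatbot backend. It is not taken from Check Point or OpenAI: the key registry `API_KEYS`, the role name `chat`, and the functions `sign`, `is_authorised`, and `handle_request` are all hypothetical names chosen for this example, and the `forward` callable stands in for whatever model call an organisation actually uses.

```python
import hashlib
import hmac

# Hypothetical registry of known callers: API key -> shared secret and allowed roles.
# In practice this would live in a secrets manager, not in source code.
API_KEYS = {
    "team-support": {"secret": b"replace-with-a-real-secret", "roles": {"chat"}},
}


def sign(secret: bytes, prompt: str) -> str:
    """Return an HMAC-SHA256 signature of the prompt, as a hex string."""
    return hmac.new(secret, prompt.encode("utf-8"), hashlib.sha256).hexdigest()


def is_authorised(api_key: str, signature: str, prompt: str, role: str = "chat") -> bool:
    """Authenticate the caller by signature, then check it holds the required role."""
    entry = API_KEYS.get(api_key)
    if entry is None:
        return False  # unknown caller
    expected = sign(entry["secret"], prompt)
    # Constant-time comparison on bytes, so malformed input cannot raise or leak timing.
    if not hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8")):
        return False  # signature does not match: authentication fails
    return role in entry["roles"]  # authorisation check


def handle_request(api_key: str, signature: str, prompt: str, forward) -> str:
    """Forward the prompt to the chatbot backend only for authorised callers."""
    if not is_authorised(api_key, signature, prompt):
        return "403 Forbidden"
    return forward(prompt)
```

A caller would compute `sign(secret, prompt)` on its side and send the result with the request; anything that fails the check never reaches the model.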

Kochar says it’s important to invest in threat hunting, improve employees’ cybersecurity education, and use ML-based next-generation endpoint detection systems.

